AI Bitcoin Price Predictions

What Works, What Fails, and How to Read Them

A Byte & Block deep dive into the world of AI BTC forecasts — minus the prophecy theater.

AI says Bitcoin is going to… where exactly?

If you’ve opened social media or a news site lately, you’ve seen the headline format: “AI says Bitcoin is going to $X.”

These posts explode because they offer two things people crave in volatile markets: certainty and speed.

The problem? Most of them blur very different systems into one bucket called “AI,” then present the outputs as guarantees.

That’s not analysis. That’s narrative compression.

This guide does three things:

1. separates the major AI prediction types,

2. shows where they genuinely help,

3. and gives you a practical framework so you can read these calls without getting farmed by confidence.

Why these headlines keep winning anyway

Let’s be honest: “AI predicts BTC range under uncertainty” won’t outperform “AI says $250K next.”

Simple, emotional, directional content wins feeds.

Three reasons this format keeps working:

1) Certainty sells

Under stress, people tend to prefer a clear wrong answer over a nuanced, probabilistic one.

2) Authority transfer

People borrow trust from the word “AI,” even when the output came from a generic prompt with no robust methodology.

3) Fast shareability

A single number is easier to repost than a conditions-based framework.

So yes, these headlines are click-efficient. That does **not** mean they’re decision-useful.

What “AI prediction” actually means (and why mixing them is a mess)

Most discussions lump all AI calls together. Big mistake.

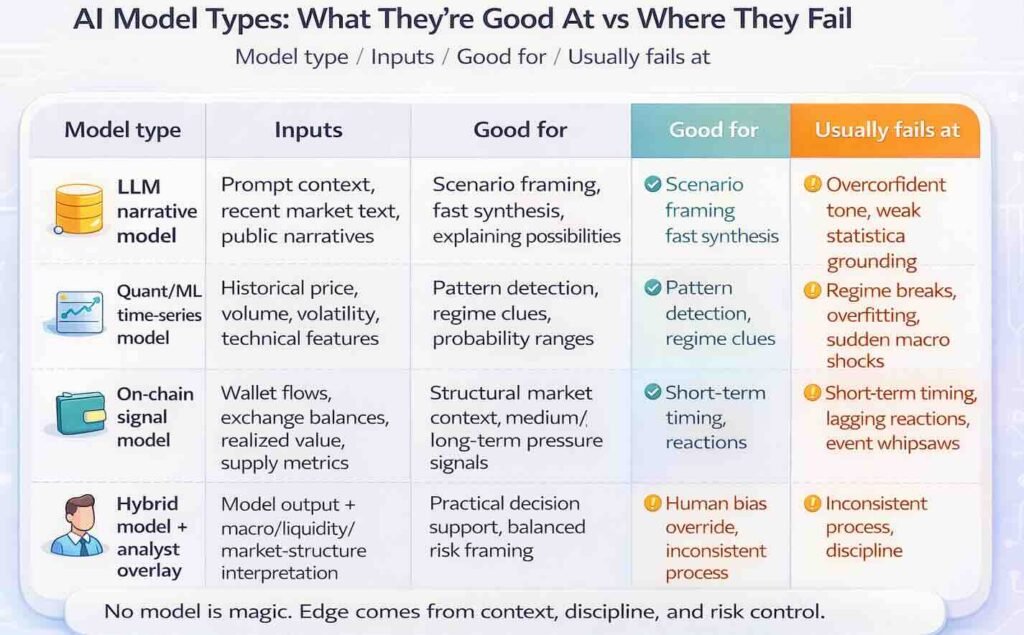

Type 1: LLM narrative forecasts

What it is: A language model generating directional text from prompt context.

Strength: Great at scenario storytelling and synthesis.

Failure mode: Sounds confident even when that confidence isn’t justified; can mirror the prevailing narrative too closely.

Type 2: Quant/ML time-series models

What it is: Models trained on historical price/vol/volume features.

Strength: Can detect recurring statistical patterns and regime tendencies.

Failure mode: Overfitting and regime breaks; looks brilliant until the environment changes.

Type 3: On-chain signal models

What it is: Model outputs based on wallet behavior, flows, supply metrics, realized values, etc.

Strength: Better structural context than pure price-only systems.

Failure mode: Timing can lag; strong long-term signal, weak short-term precision.

Type 4: Hybrid systems (model + analyst overlay)

What it is: AI output interpreted by humans through a macro, liquidity, and market-structure lens.

Strength: Usually the most practical option in real trading and investing settings.

Failure mode: Human bias can override model discipline.

Bottom line: when someone says “AI predicted BTC,” your first question should be:

Which AI, trained on what, evaluated how?

Reality check: how often do public AI predictions actually work?

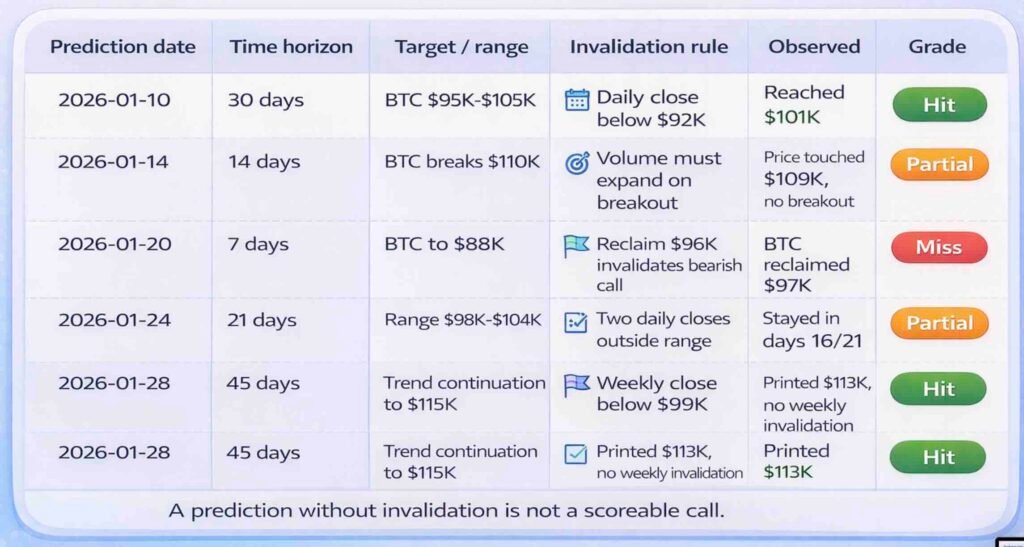

The dirty secret: many public prediction posts are judged with fuzzy scorekeeping.

- A target hit briefly? Claimed as “correct.”

- A timeline missed by months? Quietly ignored.

- A wide range? Retrofitted as success.

If you want honesty, score calls like this:

- No invalidation = not a prediction, just vibes.

- No horizon = not testable.

- No log of misses = marketing, not analysis.
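Those three rules are easy to turn into a scorer. The sketch below is a minimal, hypothetical example: the `Call` record, its field names, and the choice to score bullish calls on daily closes are all assumptions for illustration, and the prices are toy numbers, not market data. A call only counts as a hit if the target prints inside the horizon before the invalidation level does, and misses stay in the hit-rate denominator.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Call:
    """Hypothetical record of one public, bullish BTC call (field names are illustrative)."""
    target: float                  # price the call says will be reached
    invalidation: Optional[float]  # price that proves the call wrong
    horizon_days: Optional[int]    # how many daily closes the call has to work


def score_call(call: Call, daily_closes: List[float]) -> str:
    """Return 'hit', 'miss', or 'untestable' for a bullish call.

    `daily_closes` are assumed to start the day after the call was published.
    Only closes count, so a brief intraday wick through the target is not a hit.
    """
    if call.invalidation is None or call.horizon_days is None:
        return "untestable"  # no invalidation or no horizon: nothing to score
    for close in daily_closes[: call.horizon_days]:
        if close <= call.invalidation:
            return "miss"  # invalidated before the target printed
        if close >= call.target:
            return "hit"   # target reached inside the stated window
    return "miss"  # window expired; a late "hit" still counts as a miss


# Toy numbers, not real market data.
log = [
    (Call(target=110, invalidation=90, horizon_days=5), [100, 104, 111]),
    (Call(target=110, invalidation=90, horizon_days=2), [100, 104, 111]),
    (Call(target=110, invalidation=None, horizon_days=5), [100, 104, 111]),
    (Call(target=110, invalidation=95, horizon_days=5), [100, 94, 120]),
]
results = [score_call(call, closes) for call, closes in log]
testable = [r for r in results if r != "untestable"]
hit_rate = testable.count("hit") / len(testable)  # misses stay in the denominator
print(results, round(hit_rate, 2))  # ['hit', 'miss', 'untestable', 'miss'] 0.33
```

Note the second and fourth calls: the same target eventually prints, but one arrives after the horizon and the other after invalidation, so neither gets retrofitted as a win.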

Where AI *does* help (if you use it correctly)

Here’s the part most people miss: AI can be genuinely useful — just not as an oracle.

1) Scenario mapping

AI is great for producing structured bear/base/bull paths with key assumptions.

2) Regime labeling

It can help classify market conditions (trend, chop, squeeze-prone, macro-sensitive).

3) Signal clustering

It can summarize many indicators into decision-friendly buckets faster than manual workflows.

4) Risk framing

It can produce probability-weighted ranges that are more useful than single-point moon targets.
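Scenario mapping and risk framing fit together in a few lines. This is a minimal sketch under stated assumptions: the `Scenario` structure is hypothetical, ranges are expressed as % change from today’s price, and the weights and ranges are illustrative placeholders, not a forecast. It reports the probability-weighted midpoint alongside the full range instead of a single target.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Scenario:
    """One hypothetical bear/base/bull path, in % change from today's price."""
    name: str
    probability: float  # subjective weight; all weights must sum to 1
    low_pct: float      # lower edge of the range in this scenario
    high_pct: float     # upper edge of the range in this scenario


def summarize(scenarios: List[Scenario]) -> Dict[str, float]:
    total = sum(s.probability for s in scenarios)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total}, not 1")
    # Probability-weighted midpoint of each scenario's range.
    expected_mid = sum(s.probability * (s.low_pct + s.high_pct) / 2 for s in scenarios)
    return {
        "expected_mid_pct": expected_mid,
        "overall_low_pct": min(s.low_pct for s in scenarios),
        "overall_high_pct": max(s.high_pct for s in scenarios),
    }


# Illustrative weights and ranges only, not a forecast.
scenarios = [
    Scenario("bear", 0.25, -30.0, -15.0),
    Scenario("base", 0.50, -10.0, 10.0),
    Scenario("bull", 0.25, 15.0, 40.0),
]
print(summarize(scenarios))
# {'expected_mid_pct': 1.25, 'overall_low_pct': -30.0, 'overall_high_pct': 40.0}
```

The weighted midpoint of +1.25% on its own reads as “flat.” The -30% to +40% spread is what a position actually has to survive, which is why the range is the more useful output.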

If you treat AI as a planning engine, it can improve decisions.

If you treat it as a crystal ball, it will eventually punish you.

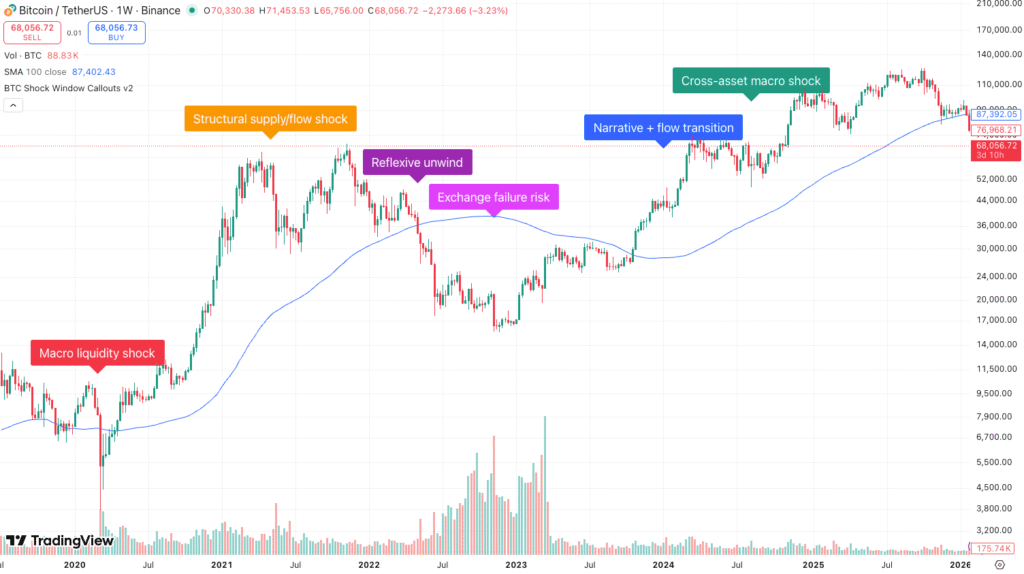

Where AI breaks (and usually breaks hard)

Even strong models have predictable blind spots.

- Mar 2020 (macro liquidity shock): persistence-style models underreacted to the regime break.

- May–Jun 2021 (structural supply/flow shock): the China mining ban plus deleveraging broke trend extrapolation.

- May–Jun 2022 (reflexive unwind): the LUNA/3AC cascade rapidly shifted the volatility and correlation regime.

- Nov 2022 (exchange failure risk): the FTX collapse was an idiosyncratic, off-model event.

- Jan 2024 (narrative + flow transition): the ETF-driven positioning reset made prior-cycle patterns less reliable.

- Aug 2024 (cross-asset macro shock): crypto-only models lagged the risk-off move around the JPY carry-trade unwind.

Turning points

Models trained on persistence tend to underreact to inflection events.
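To see why, here is a deliberately naive stand-in for a persistence-style model: forecast the next price as the mean of the last three. It is an assumption chosen for clarity, not how any specific production model works, and the price path is a toy series with one sharp break.

```python
from typing import List


def persistence_forecast(prices: List[float], window: int = 3) -> List[float]:
    """Forecast each next price as the mean of the previous `window` prices.

    A deliberately naive stand-in for a persistence-style model.
    """
    return [sum(prices[i - window:i]) / window for i in range(window, len(prices))]


# Toy path: steady uptrend, then a sudden break at index 5.
prices = [100, 102, 104, 106, 108, 80, 79, 79]
window = 3
forecasts = persistence_forecast(prices, window)
errors = [actual - f for actual, f in zip(prices[window:], forecasts)]
print(errors)  # [4.0, 4.0, -26.0, -19.0, -10.0]
```

During the trend the forecast lags by a steady 4 points. At the break it is 26 points too high, and two bars later it is still 10 points too high: the model keeps anchoring on a regime that no longer exists.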

Macro shocks

Surprise CPI prints, policy pivots, liquidity stress, geopolitical headlines — these can invalidate model assumptions instantly.

Reflexivity loops

If enough traders front-run the same “AI level,” the level becomes crowded and unstable.

Regime shifts

A model tuned in one volatility/liquidity regime can degrade fast in another.

This is why model confidence should fall when market structure changes — not rise.
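One simple way to put that rule into practice is to label volatility regimes and shrink confidence when current volatility runs above the regime a model was calibrated on. The sketch below assumes, hypothetically, that the first `window` log returns are the calibration period; the window size and the 2x threshold are illustrative, and the price path is a toy series.

```python
import math
import statistics
from typing import List, Tuple


def label_regimes(
    prices: List[float], window: int = 5, high_vol_ratio: float = 2.0
) -> List[Tuple[str, float]]:
    """Tag each rolling window 'normal' or 'high-vol' and return a confidence multiplier.

    Hypothetical assumption: the first `window` returns are the calm regime the
    model was calibrated on. Window size and the 2x ratio are illustrative.
    """
    rets = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    baseline = statistics.stdev(rets[:window])
    out = []
    for end in range(window, len(rets) + 1):
        current = statistics.stdev(rets[end - window:end])
        label = "high-vol" if current > high_vol_ratio * baseline else "normal"
        # Confidence shrinks when volatility exceeds the calibration regime; it never rises above 1.
        multiplier = 1.0 if current == 0 else min(1.0, baseline / current)
        out.append((label, multiplier))
    return out


# Toy path: small alternating moves, then large swings.
path = [100, 101, 100, 101, 100, 101, 90, 100, 85, 100]
for label, mult in label_regimes(path):
    print(label, round(mult, 2))
```

The multiplier is capped at 1, so a calm stretch never inflates confidence beyond the calibrated level, while a volatile one pulls it down.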

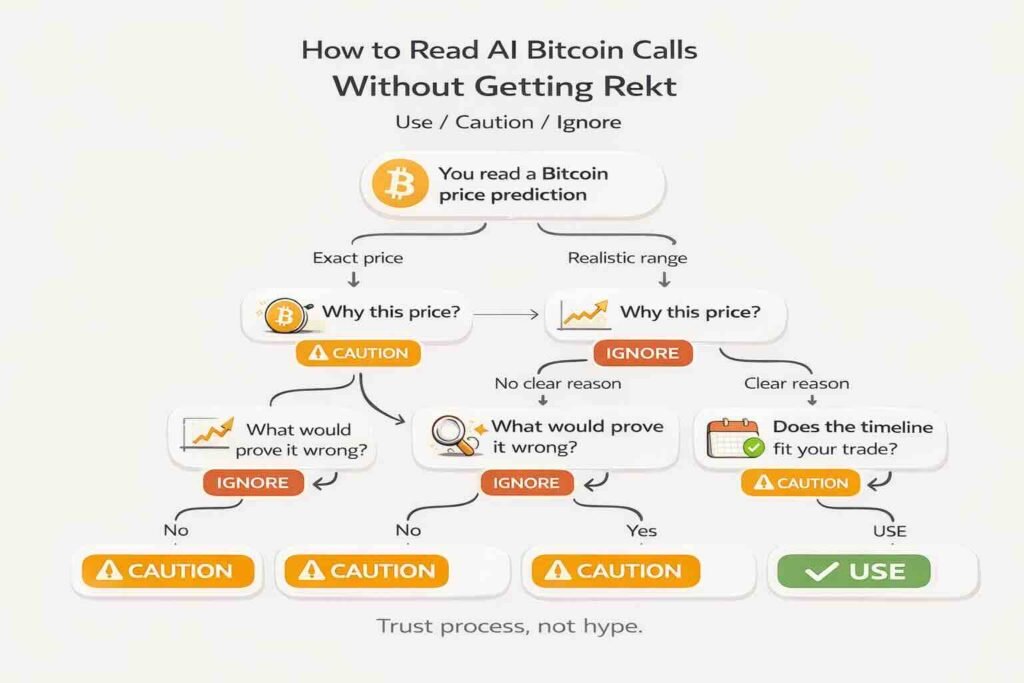

Practical framework: how to read AI BTC calls without getting rekt

Use this quick filter before you trust any Bitcoin prediction you see online:

1) Is it giving one exact price, or a realistic range?

If it only gives one big number (like “$250K soon”), be skeptical.

Serious analysis usually gives a range and conditions.

2) Does it explain *why* this could happen?

If there’s no reasoning behind the call, there’s no edge.

3) Does it say what would prove it wrong?

A useful forecast includes a clear “this fails if X happens” line.

No invalidation = not testable.

4) Does the timeline fit your decision?

A long-term forecast should not drive a short-term trade.

5) Does current market behavior support the claim?

Check whether price action, volume, and liquidity actually line up with the call.

6) Are you assuming the model can be wrong?

Model error is normal. Position size should reflect that.

7) Does the source track bad calls too?

If someone only shows wins and hides misses, trust should drop.
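Points 3 and 6 connect directly: once a call has an invalidation level, you can size the position so that being wrong costs a fixed slice of the account. The sketch below uses fixed-fractional sizing for a long position. That is one common approach we’re assuming here, not the only one, and it ignores fees, slippage, and fast moves that fill well past the invalidation level. All numbers are toy values.

```python
def position_size(equity: float, risk_fraction: float, entry: float, invalidation: float) -> float:
    """Units to buy so that a drop from `entry` to `invalidation` costs about `risk_fraction` of equity.

    Fixed-fractional sizing for a long position; ignores fees, slippage, and gaps
    through the invalidation level.
    """
    if not 0 < risk_fraction < 1:
        raise ValueError("risk_fraction must be between 0 and 1")
    risk_per_unit = entry - invalidation
    if risk_per_unit <= 0:
        raise ValueError("for a long, invalidation must sit below entry")
    return equity * risk_fraction / risk_per_unit


# Toy numbers: same account and risk budget, two different invalidation levels.
tight = position_size(10_000, 0.01, entry=100.0, invalidation=95.0)
wide = position_size(10_000, 0.01, entry=100.0, invalidation=90.0)
print(round(tight, 6), round(wide, 6))  # 20.0 10.0
```

A wider invalidation means a smaller position for the same risk budget, so the forecast’s own “this fails if X” line ends up setting how much conviction you can afford.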

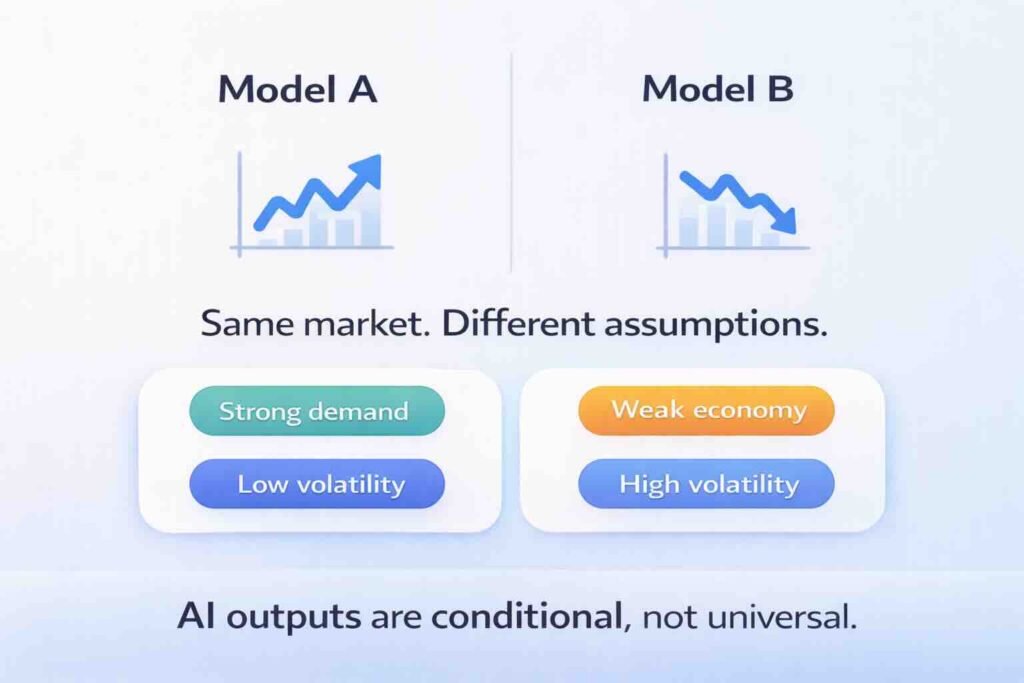

Mini case: same market, opposite AI outputs

You can feed similar data to different AI stacks and get opposite conclusions:

- Model A: “trend continuation likely”

- Model B: “mean reversion probability elevated”

Both can be internally logical because they optimize different objectives and windows.

That’s not proof AI is useless.

That’s proof model outputs are conditional tools, not universal truth.

The edge comes from understanding context and selecting the right tool for the job.
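Here is a minimal illustration of how that happens. Both rules are hypothetical and deliberately simple: the lookback, window, and z-score threshold are assumptions, and the price series is a toy example. Fed the same data, a trend-continuation rule and a mean-reversion rule point in opposite directions.

```python
import statistics
from typing import List


def trend_signal(prices: List[float], lookback: int = 5) -> int:
    """Hypothetical 'Model A': +1 (continuation up) if price is above its level `lookback` bars ago."""
    return 1 if prices[-1] > prices[-1 - lookback] else -1


def mean_reversion_signal(prices: List[float], window: int = 10, z_threshold: float = 1.5) -> int:
    """Hypothetical 'Model B': fade moves stretched more than `z_threshold` stdevs from the recent mean."""
    recent = prices[-window:]
    sd = statistics.stdev(recent)
    if sd == 0:
        return 0
    z = (prices[-1] - statistics.mean(recent)) / sd
    if z > z_threshold:
        return -1  # stretched above the mean: expects a pullback
    if z < -z_threshold:
        return 1   # stretched below the mean: expects a bounce
    return 0


# Same toy data for both models: a quiet range, then a sharp push higher.
prices = [100, 101, 100, 101, 100, 101, 100, 101, 100, 110]
print(trend_signal(prices), mean_reversion_signal(prices))  # 1 -1
```

Neither rule is buggy. Model A asks “is price higher than it was?” and Model B asks “is price unusually far from its recent average?” The same breakout answers yes to both, which produces opposite calls.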

So… are AI Bitcoin predictions reliable?

Short answer: reliable enough for structured scenarios, unreliable as deterministic price prophecy.

If you force a yes/no:

- As risk-planning tools: useful.

- As headline target machines: dangerous.

The smartest way to use AI in crypto is boring but effective:

- generate scenarios,

- stress-test assumptions,

- define invalidation,

- then let market structure confirm or reject.

That approach won’t go viral.

It will keep you solvent longer.

Final word

AI won’t replace market uncertainty.

It just gives you faster ways to organize it.

So treat AI calls like weather models:

- useful for planning,

- dangerous when read as destiny.

In crypto, confidence is cheap.

Calibration is alpha.

☕ Byte & Block out.

💬 What’s Next

Up next on Byte & Block:

- “The Tokenization Boom: How Real-World Assets Are Coming On-Chain”

Follow @byte_and_block for bite-sized insights, or subscribe to the newsletter for deeper dives.

Telegram Bot
